MIT Model Automates AI for Medical Decision-Making

Over the past two years, artificial intelligence has proven to be a transformative technology in the medical device industry. One of its biggest promises is its role in predictive analytics or medical decision-making.

But to use AI to guide diagnosis or treatment decisions, someone first has to comb through the training datasets and pick out the features that will be important for making predictions. This process, known as feature engineering, is often laborious and expensive, and it is becoming more so as wearable sensors grow in popularity, according to a recent MIT news story.

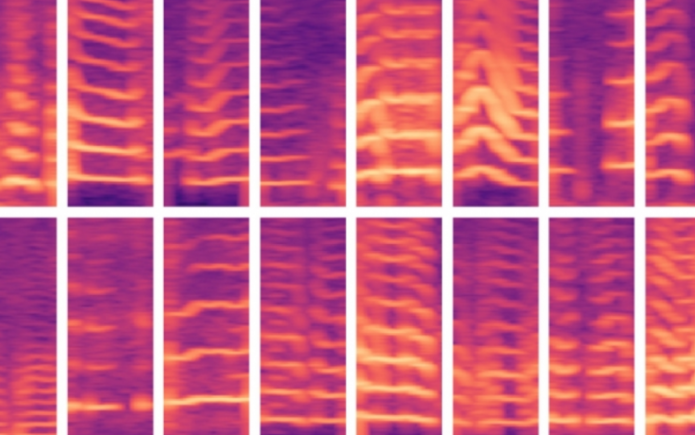

That’s why MIT researchers set out to demonstrate a machine learning model that automatically learns features predictive of vocal cord disorders. The features were derived from a dataset of about 100 people, each contributing about a week’s worth of voice-monitoring data and several billion samples. In other words, the dataset has a small number of subjects and a large amount of data per subject. It contains signals captured by a tiny accelerometer mounted on subjects’ necks. The accelerometer sent its readings to a smartphone, which recorded data from the sensor’s displacements.

In experiments, the model used features automatically extracted from these data to classify, with high accuracy, patients with and without vocal cord nodules, the researchers reported. The model accomplished this task without a large set of hand-labeled data.

“It’s becoming increasingly easy to collect long time-series datasets. But you have physicians that need to apply their knowledge to labeling the dataset,” said lead author Jose Javier Gonzalez Ortiz, a PhD student in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). “We want to remove that manual part for the experts and offload all feature engineering to a machine-learning model.”

The researchers said the model can be adapted to learn patterns of any disease or condition. But the ability to detect the daily voice-usage patterns associated with vocal cord nodules is an important step in developing improved methods to prevent, diagnose, and treat the disorder, they said. That could include designing new ways to identify and alert people to potentially damaging vocal behaviors.

Joining Gonzalez Ortiz on the paper are John Guttag, the Dugald C. Jackson Professor of Computer Science and Electrical Engineering and head of CSAIL’s Data Driven Inference Group; Robert Hillman, Jarrad Van Stan, and Daryush Mehta, all of Massachusetts General Hospital’s Center for Laryngeal Surgery and Voice Rehabilitation; and Marzyeh Ghassemi, an assistant professor of computer science and medicine at the University of Toronto.

Gonzalez Ortiz also described a pitfall of training on a small number of subjects: a model can learn to recognize individual people rather than the disorder itself.

“Instead of learning features that are clinically significant, a model sees patterns and says, ‘This is Sarah, and I know Sarah is healthy, and this is Peter, who has a vocal cord nodule.’ So, it’s just memorizing patterns of subjects. Then, when it sees data from Andrew, which has a new vocal usage pattern, it can’t figure out if those patterns match a classification,” Gonzalez Ortiz said.
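
A common way to check whether a model is memorizing subjects, as in the quote above, is to evaluate it only on people it never saw during training. The Python sketch below illustrates that general safeguard and is not the MIT team’s method. The function name `split_by_subject`, the toy readings, and the fourth subject “Maria” are all hypothetical, introduced only for illustration.

```python
import random
from collections import defaultdict


def split_by_subject(records, test_fraction=0.25, seed=0):
    """Split (subject_id, sample) pairs so no subject appears in both sets.

    Hypothetical helper for illustration; not taken from the MIT paper.
    """
    # Group every sample under the subject it came from.
    by_subject = defaultdict(list)
    for subject_id, sample in records:
        by_subject[subject_id].append(sample)

    # Shuffle whole subjects (not individual samples) reproducibly.
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)

    # Hold out at least one complete subject for testing.
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects = set(subjects[:n_test])

    train = [(s, x) for s in subjects if s not in test_subjects
             for x in by_subject[s]]
    test = [(s, x) for s in subjects if s in test_subjects
            for x in by_subject[s]]
    return train, test


# Made-up toy readings; the values carry no clinical meaning.
records = [
    ("Sarah", 0.1), ("Sarah", 0.2),
    ("Peter", 0.8), ("Peter", 0.9),
    ("Andrew", 0.5), ("Andrew", 0.6),
    ("Maria", 0.3), ("Maria", 0.4),
]

train, test = split_by_subject(records)
train_ids = {s for s, _ in train}
test_ids = {s for s, _ in test}
print("train subjects:", sorted(train_ids))
print("test subjects:", sorted(test_ids))
```

Because the held-out person contributes no training samples, a model that has only memorized individual subjects would be expected to score poorly on the test set, which is what makes the problem visible.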

The researchers’ next steps include monitoring how various treatments such as surgery and vocal therapy impact vocal behavior. If patients’ behaviors move from abnormal to normal over time, they’re most likely improving. The researchers also want to use a similar technique on electrocardiogram data.

SOURCE: MDDI